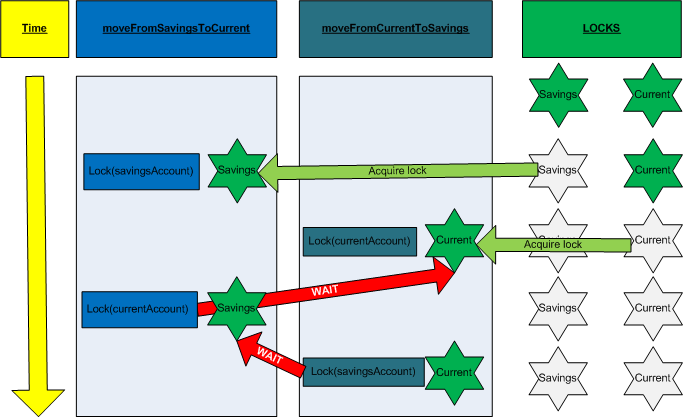

So last time I left you with the horror of deadlocks. To recap, a deadlock is when two (or more) threads can’t continue because each is waiting for a resource that another one holds, so none of them can ever get what it needs. A simple deadlock can occur when two threads, let’s call them A and B, both require access to two locks, but lock them in different orders.

1. Thread A locks resource 1

2. Thread B locks resource 2

3. Thread A tries to get a lock on resource 2 but can’t until Thread B unlocks it

4. Thread B tries to get a lock on resource 1 but can’t until Thread A unlocks it

Step 4 of the above can never succeed because Thread A is already waiting for Thread B, and step 3 can never succeed because Thread B is now waiting for Thread A. They’re mutually locked out, or deadlocked.

One of the easiest things to say about avoiding deadlocks is that you should always lock the resources in the same order. This will indeed prevent deadlocks and it works really well in simple little examples, but when you’re elbows deep in a myriad of objects and there’s all sorts of stuff flying around, it can be difficult to ensure that this actually happens. That doesn’t mean you should abandon the idea; indeed, I would suggest trying to make sure that it’s the case anyway. It’s just not always practical to guarantee.
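One way to make "always the same order" hold for any pair of objects is to order the locks by some stable key. The following is only a sketch under stated assumptions: the `Account` type, its `Id` property, and the `OrderedTransfer` helper are hypothetical names invented for illustration, and the ordering only works if every account has a unique `Id`.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical account type for this sketch; Id and Total are assumptions.
class Account
{
    public int Id { get; }
    public int Total { get; set; }

    public Account(int id, int total)
    {
        Id = id;
        Total = total;
    }
}

static class OrderedTransfer
{
    // Moves money between two accounts. The account with the lower Id is
    // always locked first, whichever direction the money is moving.
    // Assumes the two accounts have different Ids.
    public static bool Transfer(Account from, Account to, int amount)
    {
        Account first = from.Id < to.Id ? from : to;
        Account second = from.Id < to.Id ? to : from;

        lock (first)
        {
            lock (second)
            {
                if (from.Total > amount)
                {
                    from.Total -= amount;
                    to.Total += amount;
                    return true;
                }
                return false;
            }
        }
    }

    // Runs transfers in both directions at once and returns the combined total.
    public static int RunDemo()
    {
        var savings = new Account(1, 1000);
        var current = new Account(2, 1000);

        Task a = Task.Run(() => { for (int i = 0; i < 10000; i++) Transfer(savings, current, 1); });
        Task b = Task.Run(() => { for (int i = 0; i < 10000; i++) Transfer(current, savings, 1); });
        Task.WaitAll(a, b);

        return savings.Total + current.Total;
    }
}
```

Because both directions take the locks in the same order, the two tasks in `RunDemo()` can never end up holding one lock each while waiting for the other.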

Let’s look at the savings and current account example again. This is how it was…

    BankAccount currentAccount;
    BankAccount savingsAccount;

    void moveFromSavingsToCurrent(int amount)
    {
        //we need to work with savings, so lock it
        lock(savingsAccount)
        {
            if(savingsAccount.Total>amount)
            {
                //we can proceed, but we don't want any inconsistencies so we now need
                //to lock currentAccount
                lock(currentAccount)
                {
                    savingsAccount.Total -= amount;
                    currentAccount.Total += amount;
                }
            }
        }
    }

    void moveFromCurrentToSavings(int amount)
    {
        //we need to work with current, so lock it
        lock(currentAccount)
        {
            if(currentAccount.Total>amount)
            {
                //we can proceed, but we don't want any inconsistencies so we now need
                //to lock savingsAccount
                lock(savingsAccount)
                {
                    currentAccount.Total -= amount;
                    savingsAccount.Total += amount;
                }
            }
        }
    }

We can actually fix this one really easily.

    BankAccount currentAccount;
    BankAccount savingsAccount;

    void moveFromSavingsToCurrent(int amount)
    {
        //always lock savings first, then current
        lock(savingsAccount)
        {
            lock(currentAccount)
            {
                if(savingsAccount.Total>amount)
                {
                    savingsAccount.Total -= amount;
                    currentAccount.Total += amount;
                }
            }
        }
    }

    void moveFromCurrentToSavings(int amount)
    {
        //same order as above: savings first, then current
        lock(savingsAccount)
        {
            lock(currentAccount)
            {
                if(currentAccount.Total>amount)
                {
                    currentAccount.Total -= amount;
                    savingsAccount.Total += amount;
                }
            }
        }
    }

By taking out both locks every time, and always in the same order, we prevent the deadlock. But now we always have to take both locks, even when the balance check fails, which costs some performance. Is there a better way?

Possibly. If we always need to lock both for every operation that we do, then we could simply use one lock instead. In fact, simplifying the locking strategy is a great way to reduce the possibility of deadlocks and, done well, can actually improve performance. After all, the more locks there are, the more chance of a deadlock.
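As a rough sketch of the single-lock idea, both transfers below share one private lock object. The `BankAccount` stand-in (with only a settable `Total`), the `Accounts` wrapper, and the `accountsLock` field are assumptions made for this example, not the definitions used elsewhere in the series.

```csharp
using System;

// Hypothetical stand-in for the article's BankAccount; only Total is assumed.
class BankAccount
{
    public int Total { get; set; }
}

class Accounts
{
    // One lock guards both accounts, so there is no lock ordering to get wrong.
    private readonly object accountsLock = new object();

    public BankAccount Savings { get; } = new BankAccount();
    public BankAccount Current { get; } = new BankAccount();

    public bool MoveFromSavingsToCurrent(int amount)
    {
        lock (accountsLock)
        {
            if (Savings.Total > amount)
            {
                Savings.Total -= amount;
                Current.Total += amount;
                return true;
            }
            return false;
        }
    }

    public bool MoveFromCurrentToSavings(int amount)
    {
        lock (accountsLock)
        {
            if (Current.Total > amount)
            {
                Current.Total -= amount;
                Savings.Total += amount;
                return true;
            }
            return false;
        }
    }
}
```

The trade-off is that every operation on either account now queues behind the same lock, which is fine when the two are nearly always used together, as they are here.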

Another trick is to test locks, either with or without a timeout. In order to do this we need to move away from the simple lock statement and explicitly use a mutex (or similar). In doing so we break out of the simple C# constructs into the world where we must know what we are doing, or it will hurt us.

    Mutex m = new Mutex();

    ...

    m.WaitOne();
    try
    {
        //access the required resources...
    }
    finally
    {
        m.ReleaseMutex();
    }

Here’s the basic pattern for using a Mutex. It’s an object in C#, so we need to create it. We then call WaitOne() on it when we need to get the lock, and we call ReleaseMutex() when we’re done. It’s always good to do this in a try…finally, because the number of times a Mutex is acquired (with WaitOne()) must match the number of times it’s released (with ReleaseMutex()). This may sound silly, but a thread can actually acquire a Mutex more than once, for instance in a recursive function. If one of those calls threw an exception and skipped its release, the Mutex would be left with more acquisitions than releases and would still be held. Other threads would then wait forever or, if the owning thread exits while still holding it, the next thread to acquire it would get an AbandonedMutexException. A thread must exit leaving all Mutex acquisitions and releases balanced.
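To make the balancing point concrete, here is a small sketch of a recursive function that takes the same Mutex at every level. The class and method names (`RecursiveMutexDemo`, `Descend`, `IsFreeForOtherThread`) are made up for this illustration; only `Mutex`, `WaitOne()`, `WaitOne(int)` and `ReleaseMutex()` are real .NET calls.

```csharp
using System;
using System.Threading;

static class RecursiveMutexDemo
{
    private static readonly Mutex mutex = new Mutex();

    // Each level of recursion acquires the mutex once and releases it once.
    // Returns the depth reached.
    public static int Descend(int depth)
    {
        mutex.WaitOne(); // the owning thread can re-acquire without blocking
        try
        {
            if (depth == 0)
            {
                return 0;
            }
            return 1 + Descend(depth - 1);
        }
        finally
        {
            mutex.ReleaseMutex(); // runs even if a deeper call throws
        }
    }

    // Checks from a second thread whether the mutex can be taken immediately.
    public static bool IsFreeForOtherThread()
    {
        bool acquired = false;
        var worker = new Thread(() =>
        {
            acquired = mutex.WaitOne(0); // test the mutex without waiting
            if (acquired)
            {
                mutex.ReleaseMutex();
            }
        });
        worker.Start();
        worker.Join();
        return acquired;
    }
}
```

Because every `WaitOne()` is paired with a `ReleaseMutex()` in a `finally`, the Mutex should be completely free once the outermost `Descend` call returns, which is what `IsFreeForOtherThread()` is there to check.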

Apart from that, it’s just like a lock. Our personal finance example again…

    BankAccount currentAccount;
    BankAccount savingsAccount;
    Mutex accountMutex=new Mutex();

    void moveFromSavingsToCurrent(int amount)
    {
        accountMutex.WaitOne();
        try
        {
            if(savingsAccount.Total>amount)
            {
                savingsAccount.Total -= amount;
                currentAccount.Total += amount;
            }
        }
        finally
        {
            accountMutex.ReleaseMutex();
        }
    }

    void moveFromCurrentToSavings(int amount)
    {
        accountMutex.WaitOne();
        try
        {
            if(currentAccount.Total>amount)
            {
                currentAccount.Total -= amount;
                savingsAccount.Total += amount;
            }
        }
        finally
        {
            accountMutex.ReleaseMutex();
        }
    }

So what about this waiting for a timeout business? Well, there are several overloads of WaitOne() that let you specify a timeout, either as a number of milliseconds or as a TimeSpan. WaitOne() then returns a boolean: if the wait is successful and the Mutex is acquired, it returns true; if it times out, it returns false. Note that although you can use this to get you out of a deadlock, it’s a bit of a hack. Programming it properly in the first place is a better idea. In fact, the amount of error handling code that’s required to avoid nasty consequences may well be greater than the amount of code required to make it work properly in the first place.
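A minimal sketch of the timeout version might look like the following. The `TransferResult` enum, the `TimedAccounts` class, and the `BankAccount` stand-in are assumptions for this example. The important detail is that ReleaseMutex() is only called when WaitOne() actually returned true.

```csharp
using System;
using System.Threading;

// Hypothetical stand-in for the article's BankAccount; only Total is assumed.
class BankAccount
{
    public int Total { get; set; }
}

// Hypothetical result type so the caller can tell a timeout from a refusal.
enum TransferResult
{
    Done,
    InsufficientFunds,
    TimedOut
}

class TimedAccounts
{
    private readonly Mutex accountMutex = new Mutex();

    public BankAccount Savings { get; } = new BankAccount();
    public BankAccount Current { get; } = new BankAccount();

    public TransferResult MoveFromSavingsToCurrent(int amount, TimeSpan timeout)
    {
        if (!accountMutex.WaitOne(timeout))
        {
            // We never got the mutex, so we must not release it.
            return TransferResult.TimedOut;
        }

        try
        {
            if (Savings.Total > amount)
            {
                Savings.Total -= amount;
                Current.Total += amount;
                return TransferResult.Done;
            }
            return TransferResult.InsufficientFunds;
        }
        finally
        {
            accountMutex.ReleaseMutex();
        }
    }
}
```

Even in this tiny case the caller now has a third outcome to deal with (retry, give up, or report an error), which is the extra error handling the paragraph above warns about.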

I also mentioned in my last article that there is the phenomenon of the compound deadlock. This is the one that really gets programmers frustrated, because a simple deadlock like the one in our savings account example is relatively easy to spot. In reality, deadlocks can be long chains of events, the chances of which happening can be millions to one. Code that has run fine for years can suddenly start deadlocking on one machine. Why? Something very subtle with the timing has changed. Maybe an operating system change, maybe a new piece of hardware that just happens to cause the particular sequence of events in the particular order at the particular times. The worst thing is that there’s no stack trace, no debug. Often it can’t even be made to happen in the debug environment; the only place it will do it is at the customer site.

Next time I’ll talk about semaphores and I may even get on to message passing.

By the way, I’m intending to tidy these articles up, provide more examples and link them together a bit later.

The Threading Series

Article 1: Threading: Get Up To Speed Now!

Article 2: Thread Safety: Why Local Variables Are Good

Article 3: Threading: Locking and Deadlocks Introduced

Article 4: Combating Simple Deadlocks and Semaphores

Article 5: Semaphores and Message Passing