A really great way of signalling between ships. If your radio has failed, that is.

A little note: this article was written in 2010 – the principles of a semaphore haven’t changed in decades, but the code included is somewhat out of date now.

No, you’ll be glad to hear that semaphores in computing don’t involve standing in a prominent position and waving your arms around like a lunatic. Semaphores are handy little constructs that are especially useful in producer / consumer scenarios; indeed, those scenarios are by far the easiest way of explaining them. I find the best way of visualising a semaphore is to imagine it as a person, a supervisor. Every time one of the producers has some work, it hands it to the supervisor. If there are no workers (consumers) waiting for work, the supervisor stores it until one asks for more. The important thing about a semaphore is that if there are no work items, the supervisor makes the workers wait.

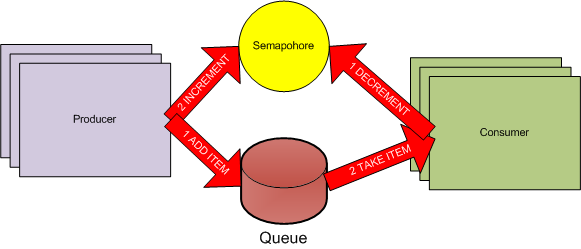

So, to the semaphore itself: it acts most like an accumulator that you increment and decrement. Worker threads (consumers) try to decrement the semaphore. If the semaphore is already at zero and a thread tries to decrement it, that thread will wait. Producers increment the semaphore. It’s important to note that if multiple threads are waiting on a semaphore and the semaphore is then incremented by one, only one thread will be released. The rest will remain waiting. There’s a more real-world example in the diagram.

In said diagram there are several producers. When a producer has an item it always follows a strict sequence: it adds the item to the queue and then increments the semaphore. The consumer threads do the reverse: they know that if they can decrement the semaphore, they can safely take an item from the queue. Of course, if the semaphore drops to zero then consumer threads will have to wait until a producer increments the semaphore. At that point one consumer thread will be released to process the added work item.
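The "one increment releases one thread" rule is easy to check in isolation. What follows is only a minimal sketch, assuming the semaphore is a `System.Threading.Semaphore` and a runtime of .NET 4.5 or later (for `Volatile`); `OneReleaseDemo` and `Run` are names invented for the illustration and aren't part of the downloadable example.

```csharp
using System;
using System.Threading;

// Hypothetical demo class, not taken from the article's zip file.
static class OneReleaseDemo
{
    // Starts three waiting threads, increments the semaphore once, and
    // returns how many threads got through as a result of that one increment.
    public static int Run()
    {
        int released = 0;

        using (var semaphore = new Semaphore(0, 3))   // starts at zero: everyone waits
        {
            var workers = new Thread[3];
            for (int i = 0; i < workers.Length; i++)
            {
                workers[i] = new Thread(() =>
                {
                    semaphore.WaitOne();                   // decrement, or wait at zero
                    Interlocked.Increment(ref released);
                });
                workers[i].Start();
            }

            semaphore.Release();                           // increment by one

            // Wait for the one released thread to record itself...
            SpinWait.SpinUntil(() => Volatile.Read(ref released) == 1);
            // ...then give the other two time to get through, if they were going to.
            Thread.Sleep(200);
            int afterOneRelease = Volatile.Read(ref released);

            semaphore.Release(2);                          // let the remaining two finish
            foreach (Thread worker in workers) worker.Join();
            return afterOneRelease;
        }
    }

    static void Main()
    {
        // Prints: Threads released by one increment: 1
        Console.WriteLine("Threads released by one increment: " + Run());
    }
}
```

Whichever of the three threads wins is up to the scheduler; the only guarantee is that exactly one of them gets through per increment.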

There’s an example of the producer / consumer queue in action in this zip file (C#, VS2008); an extract follows.

```csharp
static void Consumer()
{
    string item;
    int queueLength;

    do
    {
        mySemaphore.WaitOne(); // wait for an item to be available

        lock (myQueue) // queue isn't thread safe so we need to lock.
        {
            item = myQueue.Dequeue();
            queueLength = myQueue.Count;
        }

        if (!String.IsNullOrEmpty(item))
            Console.WriteLine(String.Format("C{0:00} DEQUEUE Q{1:00}: {2}",
                Thread.CurrentThread.ManagedThreadId, queueLength, item));

        Thread.Sleep(250);
    } while (!String.IsNullOrEmpty(item)); // we use null to signify end of processing
}

static void Producer()
{
    int queueLength;

    foreach (string line in Shelley)
    {
        Thread.Sleep(random.Next(1000));

        lock (myQueue) // queue isn't thread safe so we need to lock.
        {
            myQueue.Enqueue(line);
            queueLength = myQueue.Count;
        }

        mySemaphore.Release(); // let any waiting consumers go.

        Console.WriteLine(String.Format("p{0:00} enqueue Q{1:00}: {2} ",
            Thread.CurrentThread.ManagedThreadId, queueLength, line));
    }
}
```

Without semaphores, a producer / consumer implementation tends to end up either very complex or inefficient (for instance, with consumers repeatedly polling the queue).

That’s really all there is to say about semaphores, except that you shouldn’t limit your thinking about them to producer / consumer scenarios; they can come in surprisingly handy elsewhere.
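One use outside producer / consumer, offered purely as an illustration rather than anything from the zip file, is limiting how many threads can be inside a piece of code at once. In this sketch the semaphore starts at the limit instead of at zero, each thread decrements it on the way in and increments it on the way out. `ThrottleDemo`, `Run` and the 50 ms sleep are all made up for the example.

```csharp
using System;
using System.Threading;

// Hypothetical demo class: a semaphore used as a concurrency limiter.
static class ThrottleDemo
{
    // Pushes workerCount threads through a section guarded by a semaphore
    // that starts at limit, and returns the highest concurrency seen inside.
    public static int Run(int workerCount, int limit)
    {
        int inside = 0, maxInside = 0;
        var gate = new object();                 // protects the two counters

        using (var slots = new Semaphore(limit, limit))
        {
            var workers = new Thread[workerCount];
            for (int i = 0; i < workerCount; i++)
            {
                workers[i] = new Thread(() =>
                {
                    slots.WaitOne();             // take a slot, or wait for one
                    try
                    {
                        lock (gate)
                        {
                            inside++;
                            maxInside = Math.Max(maxInside, inside);
                        }
                        Thread.Sleep(50);        // stand-in for the real work
                        lock (gate) { inside--; }
                    }
                    finally
                    {
                        slots.Release();         // hand the slot back
                    }
                });
                workers[i].Start();
            }
            foreach (Thread worker in workers) worker.Join();
        }
        return maxInside;
    }

    static void Main()
    {
        // With a limit of 2 the peak can never be more than 2.
        Console.WriteLine("Peak concurrency: " + Run(6, 2));
    }
}
```

The same shape works for anything that is in short supply, such as a fixed number of connections or licences.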

Message Passing

Message passing is massively important in most of today’s GUI applications, yet it’s surprising the number of people who have no real idea what it’s about.

In order to understand message passing, though, you really need a basic understanding of what a thread actually is. It’s a procedure. Regardless of wrappers etc., when you create a thread what you actually say to the scheduler is “take this block of code and execute it in parallel with everything else I’m doing”. If you look at the code above you’ll notice two static methods: Producer and Consumer. These form the code blocks for the threads; in the example, 2 copies of Producer and 3 of Consumer run at the same time.

If they were a straight block of code, execution would fall off the end and the thread would end. Both, however, loop until some condition is met, at which point they stop.

It’s important if you do use loops in threads that there is some end condition so that they can stop when the program ends.
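Here is one way of giving a loop an end condition, sketched on the assumption that you are on .NET 4 or later (`CancellationTokenSource` isn’t in 3.5, where a `volatile bool` flag would do a similar job). `StoppableLoopDemo` and `Run` are invented names.

```csharp
using System;
using System.Threading;

// Hypothetical demo class: a worker loop with an explicit end condition.
static class StoppableLoopDemo
{
    // Runs a looping worker for a short while, asks it to stop, and
    // returns how many times the loop went round.
    public static int Run()
    {
        int iterations = 0;

        using (var stop = new CancellationTokenSource())
        {
            CancellationToken token = stop.Token;

            var worker = new Thread(() =>
            {
                while (!token.IsCancellationRequested)   // the end condition
                {
                    iterations++;
                    Thread.Sleep(10);                    // stand-in for real work
                }
                // Execution falls off the end here and the thread finishes.
            });
            worker.Start();

            Thread.Sleep(100);
            stop.Cancel();     // ask the loop to finish
            worker.Join();     // returns once the thread has ended
        }
        return iterations;
    }

    static void Main()
    {
        Console.WriteLine("Iterations before stopping: " + Run());
    }
}
```

Setting `Thread.IsBackground` to true is the blunter alternative: the process no longer waits for the thread, but the loop is cut off wherever it happens to be.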

The main thread in the majority of GUI applications is exactly like this: it actually runs a simple loop that waits for messages. When it gets a message, it performs some sort of action (usually calling a handler) and then returns to waiting for the next message. In this context a message is likely to be a mouse click, a key press or an instruction to minimise, and these come from the operating system, but it could equally well be an instruction that we generate ourselves.

Why do we do it? Well in the case of most GUI apps it ensures that everything that happens to the GUI happens in one thread. If the handlers are well written, then every action starts with the GUI in a stable position and finishes leaving the GUI in a stable position.

We can look at it as a transaction processing system: transactions (messages) are serialised on the queue and each one is executed discretely.

All we need to do this ourselves is a thread that loops on some sort of queue, waiting for a message to arrive, and some sort of message structure for it to process. This is an extremely powerful technique, but it isn’t without its hazards. As much as it can be used to aid thread safety by ensuring that access to data and resources is correctly sequenced, it can just as easily ruin thread safety, particularly as the exact time when a message is going to be processed cannot be known. The lifetime of, and access to, any data shared between threads must therefore be very carefully considered.
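A home-made version of that loop might look something like this. It is a sketch only, assuming .NET 4 or later so that `BlockingCollection<T>` can do the queueing and waiting; the `Message` class, its `Kind` and `Payload` fields and the message kinds are all invented for the example.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading;

// Hypothetical message structure: what happened, plus some detail.
class Message
{
    public string Kind;
    public string Payload;
}

// Hypothetical demo class: one thread looping on a queue of messages.
static class MessageLoopDemo
{
    public static List<string> Run()
    {
        var log = new List<string>();   // only ever touched by the loop thread

        using (var queue = new BlockingCollection<Message>())
        {
            var loop = new Thread(() =>
            {
                // Waits while the queue is empty; ends once CompleteAdding
                // has been called and the queue has drained.
                foreach (Message message in queue.GetConsumingEnumerable())
                {
                    switch (message.Kind)   // "call the handler"
                    {
                        case "click": log.Add("clicked " + message.Payload); break;
                        case "key": log.Add("typed " + message.Payload); break;
                        default: log.Add("ignored " + message.Kind); break;
                    }
                }
            });
            loop.Start();

            queue.Add(new Message { Kind = "click", Payload = "OK button" });
            queue.Add(new Message { Kind = "key", Payload = "A" });
            queue.Add(new Message { Kind = "resize", Payload = "" });

            queue.CompleteAdding();   // the loop's end condition
            loop.Join();
        }
        return log;
    }

    static void Main()
    {
        // Prints:
        // clicked OK button
        // typed A
        // ignored resize
        foreach (string line in Run()) Console.WriteLine(line);
    }
}
```

Because only the loop thread ever touches `log`, it needs no lock; that is the serialisation benefit described above. The hazard is the `Payload`: anything mutable put in there is shared between the sender and the loop.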

Windows itself provides a standard framework for message passing that contains two fundamental ways of passing a message – PostMessage and SendMessage. No, the names are not brilliant. PostMessage puts a message on the queue for the message processing thread and continues running. SendMessage, by comparison, puts a message on the queue for the message processing thread and then blocks the calling thread until the message has been processed.
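The difference between the two is easier to see in a toy version. To be clear, the `MessagePump` class below is hypothetical: its `Post` and `Send` methods only mimic the behaviour just described and have nothing to do with how Windows implements the real functions. It assumes .NET 4 or later.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical mini message pump; a message here is just an Action to run.
class MessagePump : IDisposable
{
    private readonly BlockingCollection<Action> queue = new BlockingCollection<Action>();
    private readonly Thread loop;

    public MessagePump()
    {
        loop = new Thread(() =>
        {
            foreach (Action handler in queue.GetConsumingEnumerable())
                handler();                           // one message at a time
        });
        loop.Start();
    }

    // Queue the message and carry on immediately.
    public void Post(Action handler)
    {
        queue.Add(handler);
    }

    // Queue the message, then block the caller until it has been handled.
    public void Send(Action handler)
    {
        var handled = new TaskCompletionSource<bool>();
        queue.Add(() => { handler(); handled.SetResult(true); });
        handled.Task.Wait();
    }

    public void Dispose()
    {
        queue.CompleteAdding();
        loop.Join();
        queue.Dispose();
    }
}

static class PostSendDemo
{
    public static string Run()
    {
        var trace = new List<string>();
        using (var pump = new MessagePump())
        using (var gate = new ManualResetEventSlim(false))
        {
            // Handler A cannot finish until the gate opens, yet Post still returns.
            pump.Post(() => { gate.Wait(); trace.Add("A handled"); });
            trace.Add("Post returned");
            gate.Set();

            // Send does not return until B (queued behind A) has been handled.
            pump.Send(() => trace.Add("B handled"));
            trace.Add("Send returned");
        }
        return string.Join(", ", trace);
    }

    static void Main()
    {
        // Prints: Post returned, A handled, B handled, Send returned
        Console.WriteLine(Run());
    }
}
```

Had the first call been a `Send`, the program would have hung at that line, because the handler is waiting for a gate that the caller can only open after the call returns.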

In WPF we can look at the Dispatcher framework – that’s an easier to use version of the same thing.

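If you hadn’t already realised that message passing opens up a whole new world of pain when it comes to deadlocking, the blocking behaviour of SendMessage should make it clear. Imagine what happens if a handler in thread A does a SendMessage to thread B, which then tries to lock a resource that thread A has locked: A is blocked waiting for B to finish handling the message, and B is blocked waiting for A to release the lock, so neither can ever proceed.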
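That situation can be reproduced safely by swapping B’s lock for one with a timeout, so that the program reports the problem instead of hanging. This is a sketch under the same assumptions as before (.NET 4 or later, a home-made queue standing in for the Windows one); `SendDeadlockDemo`, `queueForB` and `resource` are names made up for it.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical demo class: the shape of a send-while-locked deadlock.
static class SendDeadlockDemo
{
    // Returns true if B's handler managed to take the lock that A is holding.
    public static bool Run()
    {
        var resource = new object();
        bool handlerGotLock = false;

        using (var queueForB = new BlockingCollection<Action>())
        {
            var threadB = new Thread(() =>
            {
                foreach (Action handler in queueForB.GetConsumingEnumerable())
                    handler();
            });
            threadB.Start();

            // The calling thread plays the part of thread A.
            lock (resource)
            {
                var handled = new TaskCompletionSource<bool>();
                queueForB.Add(() =>
                {
                    // With a plain lock (resource) this would wait forever:
                    // A cannot release the lock until this handler finishes.
                    if (Monitor.TryEnter(resource, TimeSpan.FromMilliseconds(200)))
                    {
                        handlerGotLock = true;
                        Monitor.Exit(resource);
                    }
                    handled.SetResult(true);
                });
                handled.Task.Wait();   // the "send": A blocks while holding the lock
            }

            queueForB.CompleteAdding();
            threadB.Join();
        }
        return handlerGotLock;
    }

    static void Main()
    {
        // Prints: Handler acquired the lock: False
        Console.WriteLine("Handler acquired the lock: " + Run());
    }
}
```

The timeout is there for the demonstration only; in a real design the cure is not to hold a lock across a blocking send in the first place.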

If you’re programming with message passing it’s more important than ever to know what state your data is in at any moment and what has access to it, but you must also keep on top of flow and make sure that deadlock situations can’t arise.

OK, I suspect I’m a little bit of a nerd about this, but I actually find it quite fun to look at designs and work out where the deadlocks can happen. It’s from years of playing about with this that I understand exactly what is likely to happen and why.

Next time I’ll start looking at the constructs provided in C# and the .NET Framework 3.5 to help us with threading.

The Threading Series

Article 1: Threading: Get Up To Speed Now!

Article 2: Thread Safety: Why Local Variables Are Good

Article 3: Threading: Locking and Deadlocks Introduced

Article 4: Combating Simple Deadlocks and Semaphores

Article 5: Semaphores and Message Passing